ArtLens

HackUDC 2025 Edition

Have you ever visited a museum and, without a guide, felt a little lost—standing in front of incredible works of art, but not really knowing what you’re looking at?

That was the motivation behind ArtLens, a mobile app we built during HackUDC 2025 to bring context, story, and voice to any museum visit. With just your phone, ArtLens can recognize a painting (or sculpture), describe it in detail, tailor that description to your profile, and even read it aloud.

🧠 Motivation

Museums are full of stories—but if you’re not an art historian or don’t have a guided tour, those stories often stay hidden. We wanted to change that, using AI to create a more inclusive and personalized museum experience.

ArtLens tries to answer the questions many of us have when visiting a museum:

- What am I looking at?

- Why is this artwork important?

- What’s the story behind it?

📱 How it works

The app has three simple screens that guide the whole experience:

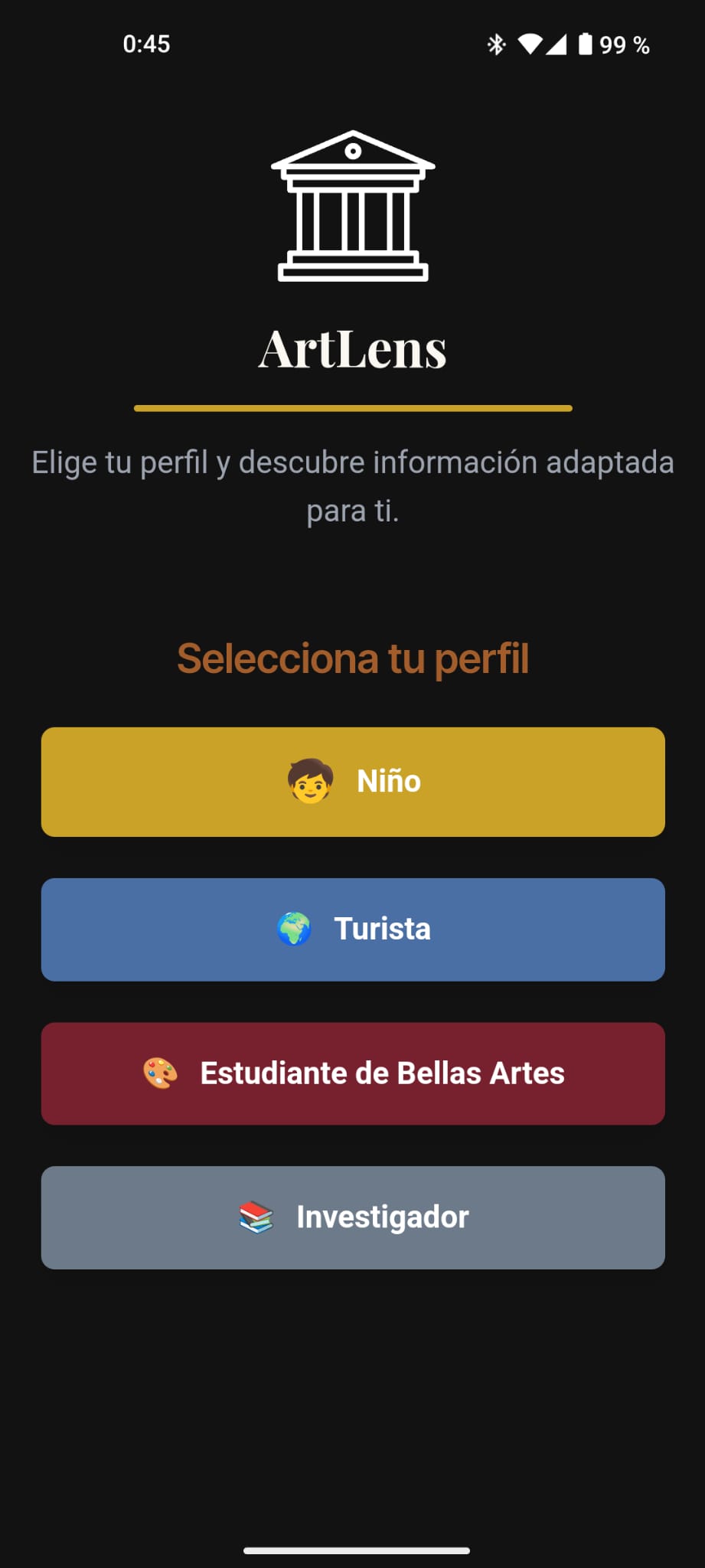

① Home – Choose your visitor profile (e.g., child, tourist, art student...)

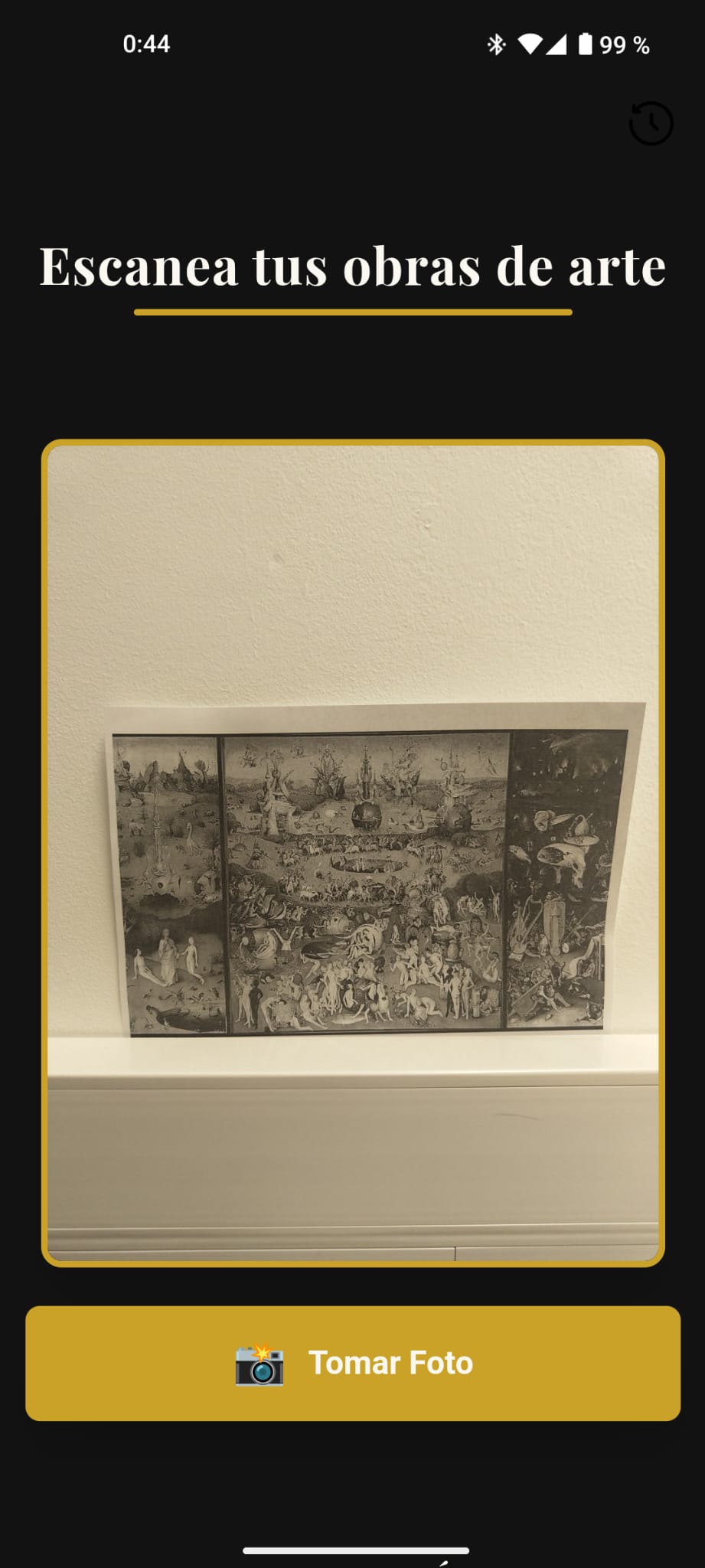

② Scan – Take a photo of an artwork directly in the museum

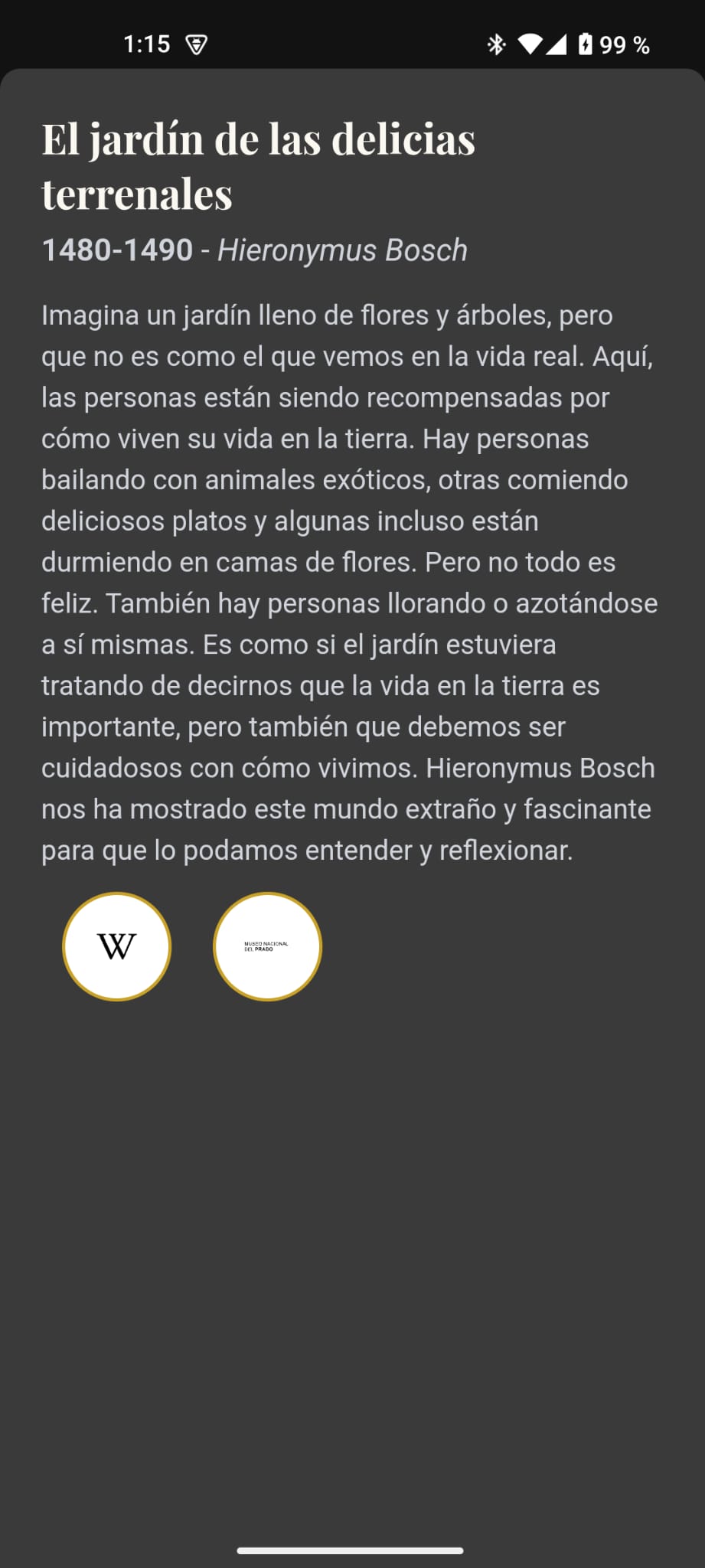

③ Result – Get a personalized description, read out loud, with a link to explore more

🛠️ Under the hood

I worked on the backend and the AI system, and this project was full of firsts for me:

- ✅ First time using CLIP (by OpenAI) for visual understanding

- ✅ First time working with Milvus, an embedding database for fast similarity search

- ✅ First time building real-time text-to-speech, using the super simple gTTS library

To ensure privacy and keep things lightweight, we also ran a local LLM (LLaMA 3.2 via Ollama) on-device. That way, the entire process could run offline—an important feature in a place like a museum.
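Asking the local model for a profile-specific explanation boils down to building a prompt and sending it to Ollama. The sketch below assumes Ollama's default local endpoint (`http://localhost:11434/api/generate`) and the `llama3.2` model tag; the profile wordings, the `build_prompt`/`build_request`/`generate` helpers, and answering in Spanish are hypothetical illustrations, not the app's actual prompts.

```python
import json
import urllib.request

# Hypothetical profile instructions; the app's real prompt wording is not published.
PROFILE_STYLES = {
    "child": "Explain it like a friendly guide talking to a 10-year-old: short sentences, no jargon.",
    "tourist": "Give a clear, engaging overview: who made it, when, and why it matters.",
    "art student": "Discuss technique, composition, and historical context in some depth.",
}

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_prompt(title: str, artist: str, profile: str, language: str = "Spanish") -> str:
    """Combine the identified artwork with the visitor's profile into one LLM prompt."""
    # Unknown profiles fall back to the general "tourist" style.
    style = PROFILE_STYLES.get(profile, PROFILE_STYLES["tourist"])
    return (
        "You are a museum audio guide at the Museo del Prado. "
        f"Describe the artwork '{title}' by {artist}. {style} "
        f"Answer in {language}."
    )


def build_request(prompt: str, model: str = "llama3.2") -> urllib.request.Request:
    """Prepare a non-streaming POST to Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3.2", timeout: float = 120.0) -> str:
    """Send the prompt to a locally running Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        # With "stream": False, Ollama replies with one JSON object whose "response" field holds the text.
        return json.loads(resp.read())["response"]
```

With `ollama serve` running and the model pulled (`ollama pull llama3.2`), `generate(build_prompt("Las Meninas", "Diego Velázquez", "child"))` returns the description as a plain string. Setting `"stream": False` keeps the sketch simple: Ollama answers with a single JSON object instead of a sequence of partial chunks.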

🔄 Full pipeline

Here’s how the system worked end-to-end:

- The visitor selects a profile (e.g., child, tourist…)

- Takes a photo of the artwork

- We extract image embeddings using CLIP

- We search those embeddings in a Milvus database of artworks (focused on Museo del Prado)

- Once we identify the artwork, we generate a prompt for the local LLM, tailored to the visitor’s profile

- The LLM creates a natural, friendly explanation of the piece

- We convert that text into speech using gTTS, providing an instant, personalized audio guide
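The matching step (CLIP embedding plus Milvus search) amounts to a nearest-neighbour lookup: the photo's embedding is compared against the stored embedding of every artwork, and the closest one wins. Here is a brute-force sketch in plain Python, assuming cosine similarity as the metric; Milvus performs the same kind of search with indexes so it stays fast as the collection grows. The toy 3-dimensional vectors, the catalog entries, and the `min_score` cut-off for rejecting photos that match nothing are hypothetical (CLIP ViT-B/32, for instance, produces 512-dimensional embeddings).

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Artwork:
    title: str
    artist: str
    embedding: list[float]  # e.g. a CLIP image embedding of a reference photo


def normalize(vec: Sequence[float]) -> list[float]:
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    a, b = normalize(a), normalize(b)
    return sum(x * y for x, y in zip(a, b))


def identify(query: Sequence[float], catalog: Sequence[Artwork],
             min_score: float = 0.8) -> Optional[tuple[Artwork, float]]:
    """Return the best-matching artwork and its score, or None if nothing is close enough.

    Brute force: one comparison per catalog entry. A vector database such as
    Milvus replaces this loop with an index for large collections.
    """
    best: Optional[tuple[Artwork, float]] = None
    for art in catalog:
        score = cosine(query, art.embedding)
        if best is None or score > best[1]:
            best = (art, score)
    if best is None or best[1] < min_score:
        return None
    return best


if __name__ == "__main__":
    catalog = [
        Artwork("Las Meninas", "Diego Velázquez", [0.9, 0.1, 0.0]),
        Artwork("The Garden of Earthly Delights", "Hieronymus Bosch", [0.0, 0.8, 0.6]),
    ]
    photo_embedding = [0.85, 0.15, 0.05]  # stand-in for CLIP's output on the visitor's photo
    match = identify(photo_embedding, catalog)
    if match:
        art, score = match
        print(f"{art.title} by {art.artist} (score {score:.2f})")
    else:
        print("Artwork not recognized")
```

A threshold like `min_score` matters in a museum: a blurry photo or an artwork missing from the dataset should produce a "not recognized" message rather than a confident description of the wrong painting.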

🖼️ Custom dataset from Museo del Prado

One big challenge was the lack of existing datasets for the Museo del Prado, especially in Spanish. So we built our own by scraping, curating, and annotating a small dataset of iconic artworks. It was a great exercise in data wrangling and in making AI more culturally grounded.

🎉 Final thoughts

We built ArtLens in just 36 hours during the HackUDC 2025 hackathon.

We didn’t sleep much, but we learned a lot—and somehow, it all worked.

Winning the Best Mobile App award (thanks to NomaSystems) was the cherry on top.

But more than that, we were proud to create something that used AI not for automation or speed, but for curiosity and cultural connection.

Because sometimes, all you need is one simple question to unlock a masterpiece:

“Tell me what I’m looking at.”